Mobile applications continue to evolve beyond simple touch interactions toward more natural, intuitive ways for users to engage. Voice and gesture interfaces are reshaping how people interact with their devices: they offer hands-free control and more immersive experiences, and they are quickly becoming expected features in modern apps. Custom mobile application development services increasingly integrate voice commands and gesture controls to meet rising user expectations and deliver smarter, more accessible, and more efficient solutions. Combining these technologies can raise user engagement, simplify navigation, and extend usability across diverse environments and user groups. In this post, we discuss the core concepts behind voice and gesture interfaces, why they matter, their benefits and challenges, best practices, real-world applications, and the future trends shaping mobile app development.

Understanding Voice and Gesture Interfaces

Voice interfaces allow users to interact with applications through spoken commands. These systems rely on speech recognition to turn audio into text, and on natural language processing (NLP) and artificial intelligence to interpret what the user meant. Through voice-controlled apps, users can search for information, control smart devices, dictate messages, or navigate complex functions without touching their screens. The technology keeps improving, with a better understanding of accents, context, and user intent.
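To make the interpretation step concrete, here is a minimal sketch of how a transcript that has already been produced by a speech recognizer might be mapped to an app intent. It assumes a simple rule-based matcher; the `Intent` class, the `PATTERNS` table, and the command phrasings are all hypothetical illustrations, and production apps would typically rely on a trained NLP model or a platform service instead.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Intent:
    """A recognized command plus the details (slots) extracted from it."""
    name: str
    slots: dict = field(default_factory=dict)


# Hypothetical command phrasings, checked in order. A real app would
# usually replace this table with an NLP model or a platform service.
PATTERNS = [
    ("search", re.compile(r"^(?:search for|find|look up) (?P<query>.+)$")),
    ("send_message", re.compile(
        r"^(?:send a message to|text|message) (?P<contact>\w+) "
        r"(?:saying )?(?P<body>.+)$")),
    ("open_screen", re.compile(
        r"^(?:open|go to|show) (?:the |my )?(?P<screen>.+)$")),
]


def parse_intent(transcript: str) -> Intent:
    """Normalize a transcript and match it against the known patterns."""
    text = re.sub(r"[^\w\s]", "", transcript.lower())  # drop punctuation
    text = re.sub(r"\s+", " ", text).strip()           # collapse whitespace
    for name, pattern in PATTERNS:
        match = pattern.match(text)
        if match:
            return Intent(name, match.groupdict())
    # Nothing matched: keep the text so the app can ask the user to rephrase.
    return Intent("unknown", {"text": text})
```

Calling `parse_intent("Open my settings")` would yield an `open_screen` intent with `screen` set to `settings`, while an unrecognized phrase falls through to `unknown` so the app can respond gracefully rather than guess.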

Gesture interfaces detect and interpret physical movements such as swipes, taps, and waves as input commands. The touchscreen captures on-screen gestures, while sensors such as accelerometers, gyroscopes, and front-facing cameras capture device motion and mid-air movements; the app then translates these signals into navigational or functional actions. This approach lets users engage with mobile apps more naturally, especially in scenarios where traditional input methods are less effective. Both voice and gesture interfaces aim to improve accessibility and enrich the overall mobile user experience.
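As a rough illustration of that translation step, the sketch below classifies a touch from its start and end coordinates and flags a shake from accelerometer samples. The function names and every threshold (`tap_slop`, `min_swipe`, `threshold_g`, and so on) are assumptions chosen for the example, not values from any platform; real apps would normally use the gesture recognizers built into the mobile OS.

```python
import math
from typing import Iterable, Tuple


def classify_touch(x0: float, y0: float, x1: float, y1: float,
                   duration_s: float, tap_slop: float = 10.0,
                   min_swipe: float = 80.0,
                   max_duration_s: float = 0.5) -> str:
    """Classify a touch as a tap, a directional swipe, or neither.

    Coordinates are in pixels with y growing downward, as on most
    mobile screens. All thresholds are illustrative assumptions.
    """
    if duration_s > max_duration_s:
        return "none"  # too slow to count as a tap or a swipe
    dx, dy = x1 - x0, y1 - y0
    distance = math.hypot(dx, dy)
    if distance <= tap_slop:
        return "tap"
    if distance < min_swipe:
        return "none"  # moved too far for a tap, too little for a swipe
    if abs(dx) >= abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    return "swipe_down" if dy > 0 else "swipe_up"


def is_shake(samples: Iterable[Tuple[float, float, float]],
             threshold_g: float = 2.5, min_peaks: int = 3) -> bool:
    """Report a shake when enough accelerometer readings (in g) spike."""
    peaks = sum(
        1 for x, y, z in samples
        if math.sqrt(x * x + y * y + z * z) > threshold_g
    )
    return peaks >= min_peaks
```

The thresholds are the part most worth tuning on real devices: a slop that is too small turns shaky taps into failed gestures, while a shake threshold that is too low triggers while the user is simply walking.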

Why Voice and Gesture Interfaces Matter in Mobile Apps

User interaction in mobile apps must evolve to match the needs of modern life. People often use apps while multitasking, driving, or dealing with other constraints. Voice and gesture controls offer intuitive and efficient solutions in such contexts. Hands-free functionality enables safer use while performing other tasks and supports accessibility for users with physical challenges.

Developers gain the opportunity to expand app functionality into wearable devices, smart home ecosystems, and AR environments. Apps that integrate voice and gesture commands are more inclusive and interactive, often resulting in better user retention and satisfaction.

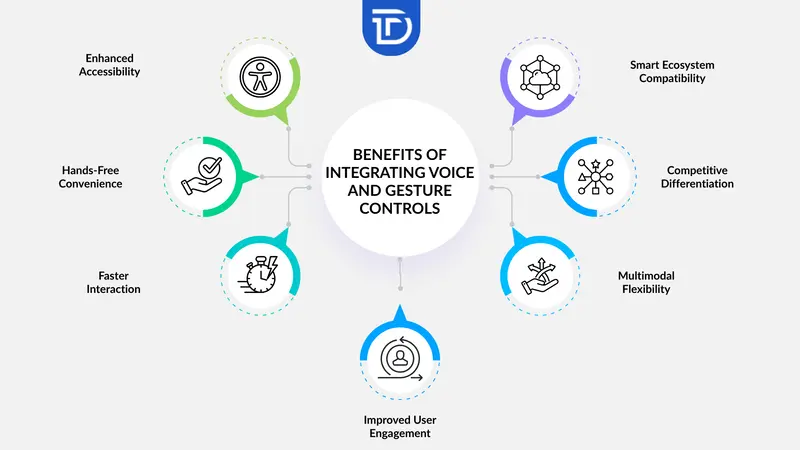

Key Benefits of Integrating Voice and Gesture Controls

Voice and gesture features are transforming mobile app experiences by making them faster, easier, and more inclusive. These controls simplify interaction, reduce friction, and adapt to how users naturally behave. Whether used in daily tasks or smart environments, they bring real value to modern mobile apps. Below are the key benefits of adding voice and gesture controls:

Enhanced Accessibility

Voice and gesture interfaces empower individuals with visual, motor, or cognitive impairments to use mobile apps without relying on traditional touchscreens or keyboards. An inclusive design approach lets more people benefit from your app and increases satisfaction across a broader, more diverse audience.

Hands-Free Convenience

Voice and gesture commands are ideal when users are multitasking. Cooking, driving, working out, and other hands-on tasks no longer prevent interaction: people can engage with the app without touching the screen, which makes real-time actions safer, more efficient, and more convenient in everyday settings.

Faster Interaction

Spoken commands or simple gestures often complete tasks more quickly than navigating through multiple screens. Skipping intermediate steps saves time and makes the app feel smoother. Shorter interaction times improve usability and support productivity across a range of user scenarios.

Improved User Engagement

Interactive elements such as gestures and voice commands make apps feel more responsive and intuitive. Users enjoy experiences that mirror natural communication, which increases emotional connection to the app. Higher engagement leads to longer sessions, repeat usage, and a deeper sense of satisfaction from using the app.

Multimodal Flexibility

Offering voice, gesture, and touch controls in parallel allows users to choose the most suitable option depending on context. Some may prefer to tap, while others speak or move. Flexibility leads to greater personalization and ensures the app adapts to each user’s environment and comfort level.
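One way to keep the three input methods interchangeable is to route all of them to the same named actions, so that adding a modality never duplicates app logic. The sketch below assumes a small in-app router; `ActionRouter`, its method names, and the example triggers are hypothetical and only illustrate the idea.

```python
from typing import Callable, Dict, Tuple


class ActionRouter:
    """Routes triggers from any input modality to shared app actions."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[], None]] = {}
        self._bindings: Dict[Tuple[str, str], str] = {}

    def register(self, action: str, handler: Callable[[], None]) -> None:
        """Define what an action does, independent of how it is triggered."""
        self._handlers[action] = handler

    def bind(self, modality: str, trigger: str, action: str) -> None:
        """Attach a voice phrase, gesture, or touch target to an action."""
        if action not in self._handlers:
            raise KeyError(f"unknown action: {action}")
        self._bindings[(modality, trigger)] = action

    def handle(self, modality: str, trigger: str) -> bool:
        """Run the bound action; return False if nothing is bound."""
        action = self._bindings.get((modality, trigger))
        if action is None:
            return False
        self._handlers[action]()
        return True


# Example: three ways to reach the same "next_track" action.
played = []
router = ActionRouter()
router.register("next_track", lambda: played.append("next_track"))
router.bind("voice", "next song", "next_track")
router.bind("gesture", "swipe_left", "next_track")
router.bind("touch", "next_button", "next_track")
```

With this layout, `router.handle("voice", "next song")` and `router.handle("gesture", "swipe_left")` run the same handler, and an unbound trigger simply returns `False` so the app can fall back to another input method.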

Competitive Differentiation

Brands that integrate voice and gesture controls often stand out as early adopters of innovation. Forward-looking features signal quality and progress, appealing to users who seek modern, dynamic digital products. That makes differentiation easier in saturated markets where users constantly compare app capabilities.

Smart Ecosystem Compatibility

Smart homes, wearable tech, and connected devices frequently use voice and motion-based interactions. Apps that support these methods work well within broader tech ecosystems, offering users continuity across platforms. Compatibility with modern devices also boosts long-term relevance and encourages broader adoption.

Challenges in Implementing Voice and Gesture Interfaces

Voice and gesture interfaces bring futuristic flair to mobile apps, but building them is not always straightforward. Developers must balance innovation with usability, accuracy, and privacy. As expectations for hands-free experiences rise, so do the challenges in delivering them effectively. Below are the key hurdles faced when implementing voice and gesture interfaces in mobile applications:

Recognition Errors

Voice and gesture recognition technologies still face challenges with accuracy. Accents and varied speech patterns, background noise, poor lighting, or unconventional movements can all lead to misinterpretation. Continuous calibration, testing, and machine learning are crucial for improving precision and giving users a reliable, low-frustration experience across different environments.
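A common way to soften recognition errors is to act on the recognizer's confidence score rather than on every result. The following is a minimal sketch of that idea, assuming the recognizer reports a confidence between 0 and 1; the `Decision` enum, the `decide` function, and both threshold values are illustrative assumptions that would need tuning against real usage data.

```python
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute"  # confident enough to act immediately
    CONFIRM = "confirm"  # plausible, so ask the user first
    RETRY = "retry"      # too uncertain, so ask the user to repeat


def decide(confidence: float, accept_at: float = 0.85,
           confirm_at: float = 0.55) -> Decision:
    """Choose how to react to a recognition result.

    The thresholds are assumed example values, not recommendations.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= accept_at:
        return Decision.EXECUTE
    if confidence >= confirm_at:
        return Decision.CONFIRM
    return Decision.RETRY
```

Destructive commands such as deleting data or sending a payment are good candidates for a higher `accept_at`, so that a misheard phrase leads to a confirmation prompt instead of an irreversible action.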

Security and Privacy Risks

Collecting voice and motion data introduces serious concerns around privacy and security. Sensitive user data must be encrypted, stored securely, and used responsibly. Developers must follow strong compliance standards, be transparent about data usage, and give users control over permissions and preferences to build trust and avoid potential misuse or legal issues.

Complex Development

Implementing gesture and voice features requires specialized development skills, integration of third-party APIs, and deep platform-level knowledge. Ensuring these interfaces function smoothly across devices, operating systems, and screen sizes adds complexity. Testing becomes more extensive, and debugging issues tied to hardware or environment can lengthen the development timeline significantly.

User Education

Many users are unfamiliar with using voice or gesture commands within apps. If there’s no guidance, adoption may lag due to uncertainty or misuse. Introducing interactive tutorials, tooltips, or simple onboarding flows can help bridge the knowledge gap and encourage users to explore and embrace the features with greater confidence.

Contextual Limitations

Interpreting what a user truly means through voice or gesture can be tricky without context. A single word or movement might mean different things depending on timing, location, or screen state. Limited contextual understanding in current systems can cause errors or confusion, making context-aware AI a necessary but still evolving solution.
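Until context-aware AI matures, a simple first step is to resolve each command against the current screen state. The sketch below assumes a hand-written lookup table; the screen names, commands, and action names in `CONTEXT_ACTIONS` and `GLOBAL_ACTIONS` are hypothetical.

```python
from typing import Optional

# Hypothetical mapping: the same word or gesture resolves to a different
# action depending on which screen the user is currently looking at.
CONTEXT_ACTIONS = {
    ("media_player", "next"): "skip_track",
    ("media_player", "swipe_left"): "skip_track",
    ("photo_gallery", "next"): "show_next_photo",
    ("photo_gallery", "swipe_left"): "show_next_photo",
    ("checkout", "next"): "go_to_payment_step",
}

# Commands that mean the same thing everywhere in the app.
GLOBAL_ACTIONS = {
    "back": "navigate_back",
    "home": "open_home_screen",
}


def resolve(screen: str, command: str) -> Optional[str]:
    """Return the action for a command on a given screen, if any."""
    action = CONTEXT_ACTIONS.get((screen, command))
    if action is not None:
        return action
    # Fall back to app-wide commands; None means "ask the user".
    return GLOBAL_ACTIONS.get(command)
```

Returning `None` for an unresolved command, rather than guessing, gives the app a clear point at which to ask the user what they meant.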

Best Practices for Custom Mobile App Development with Voice and Gesture Features

Creating voice and gesture features for custom mobile apps requires more than just technical skill. A thoughtful approach ensures these controls truly enhance the user experience without adding complexity or frustration. Developers must consider real-world usage, privacy concerns, and diverse device capabilities to deliver smooth, intuitive interactions. The following best practices help guide effective integration of voice and gesture controls into mobile applications.

Focus on User Intent

Voice and gesture controls should reflect real user needs, making tasks easier, not harder. Designing intuitive commands that match common actions helps users navigate the app naturally and improves overall satisfaction with the experience.

Use Reliable Libraries and Frameworks

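Selecting trusted SDKs and APIs is crucial for accurate recognition and cross-device compatibility. Google's ML Kit and Apple's Vision framework offer on-device pose and hand-pose detection that gesture features can build on, while platform speech APIs such as Android's SpeechRecognizer and Apple's Speech framework, or the Amazon Alexa SDK, cover voice input.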

Prioritize Privacy and Consent

Collect only essential data and always obtain user permission. Transparency about how voice and gesture data is recorded and used builds trust and ensures compliance with privacy regulations.

Design for Real-World Environments

Voice and gesture inputs must perform well despite background noise or poor lighting. Testing under real conditions, such as a busy street or a dimly lit room, helps ensure the app remains functional and reliable whenever and wherever users need it most.

Provide Feedback

Offering clear visual or audio cues confirms when commands are successful or if errors occur. Timely feedback helps users feel confident interacting with voice and gesture features and reduces frustration.

Offer Alternatives

Providing fallback controls such as touch buttons ensures everyone can use the app comfortably. Not all users will want or be able to rely on voice or gestures, especially in public or quiet settings.

Test Across Devices

Consistent performance across a wide range of devices is essential. Whether on budget Android models or flagship iPhones, voice and gesture controls should work smoothly and responsively to avoid user dissatisfaction.

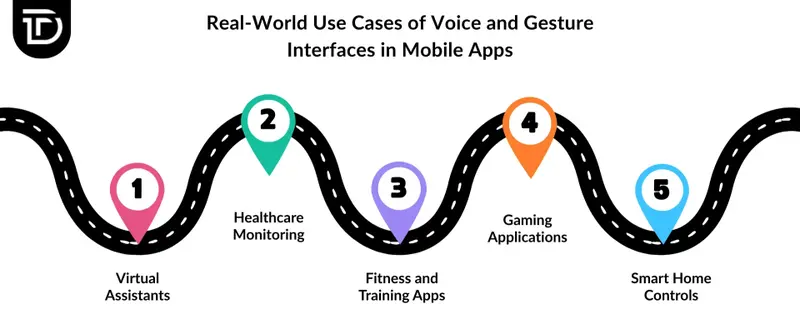

Real-World Use Cases of Voice and Gesture Interfaces in Mobile Apps

Voice and gesture interfaces are transforming how users interact with mobile apps, offering faster, hands-free, and more intuitive control. Their growing role across industries highlights their value in enhancing user experience and accessibility. Below are real-world use cases where these technologies create meaningful impact:

Virtual Assistants

Voice-powered assistants like Alexa, Google Assistant, and Siri manage tasks, answer questions, and control smart devices. These interfaces show how voice commands streamline everyday activities, making mobile apps more convenient and hands-free for users.

Healthcare Monitoring

Gesture recognition supports physiotherapy exercises while voice commands assist with symptom tracking and medication reminders. These features enable hands-free, accessible healthcare management, especially helpful for users with mobility challenges or those needing easy interaction with health apps.

Fitness and Training Apps

Gesture tracking monitors workout form and counts repetitions, while voice commands allow users to navigate routines or switch exercises without interrupting their flow. This combination creates a smooth, hands-free fitness experience that keeps users focused and motivated.

Gaming Applications

Motion detection and voice controls let players interact naturally, controlling characters and making selections through movements and speech. This immersive approach enhances gameplay by offering a more engaging and intuitive experience beyond traditional controls.

Smart Home Controls

Mobile apps use voice commands to manage lighting, thermostats, and appliances, while gestures provide alternative control options. Gesture control proves valuable in noisy environments where voice recognition may struggle, ensuring users maintain seamless control over their smart homes.

Future Trends in Voice and Gesture Interface Technologies

Machine learning and artificial intelligence will continue refining how mobile apps understand voice and motion. Improved NLP will allow for more contextual, human-like conversations, while advanced gesture tracking will recognize subtle movements and emotional cues. Multilingual support will also become more robust, making voice apps more accessible globally.

As AR and VR technologies grow, immersive interfaces will become essential. Expect mobile apps to blend voice and gesture with spatial computing, creating interactive 3D environments. Developers will focus on hybrid interfaces that shift seamlessly between voice, gesture, and traditional touch depending on the user’s environment or task.

The Bottom Line

Voice and gesture interfaces are revolutionizing the way users interact with mobile apps. These technologies offer hands-free access, improve accessibility, increase engagement, and open the door to new forms of interaction. Challenges exist but are solvable with thoughtful design, privacy safeguards, and robust testing. Businesses looking to stay ahead should explore how these interfaces can transform user experiences and elevate app performance.

At Dreamer Technoland, we specialize in custom mobile app development with advanced voice and gesture control integration. Our team uses modern technologies to build intuitive, high-performance apps tailored to your business needs. Every project is led by a dedicated project manager to ensure smooth execution and clear communication. We also offer three months of free post-launch support to help your app run smoothly after release. Choosing us means partnering with a team focused on innovation, quality, and long-term success.